We received a lot of support requests when first deploying the Qualys platform.

In one particular case, the question was as simple as they come: “Which tag should I use to get all of the systems in my scope?”

This isn’t an issue if all of a user’s systems are conveniently tagged as something like “User’s Systems”, and in some cases that works out really well. In larger implementations, however, it can be difficult to give each user a single tag, or just a few, to use in their filters. The question then becomes “How does the user know which tags to use?”, and that gets complex very quickly.

Qualys uses a system of tags to filter and sort assets according to various criteria. The combination of static tags and dynamic, rule-driven tagging options makes the tagging engine a very powerful way to carve up your data. One option that is missing as of this writing, however, is a wildcard or “all systems” tag. This becomes an issue when you want to run a report using tags rather than asset groups (which do have an “all” option).

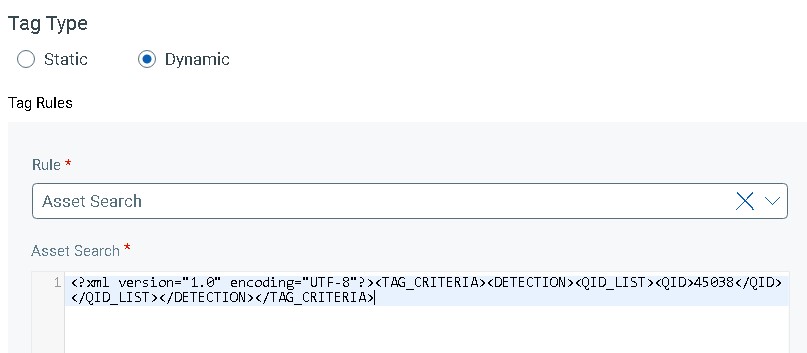

This led to the idea of using a QID (Qualys ID) common to every scanned host in an Asset Search dynamic tag. Every scanned system would then receive a single tag, which could be called “My Systems”, addressing the requirement above.

Our first attempt used QID 45038 (Host Scan Time):

```xml
<?xml version="1.0" encoding="UTF-8"?><TAG_CRITERIA><DETECTION><QID_LIST><QID>45038</QID></QID_LIST></DETECTION></TAG_CRITERIA>
```

This worked, but it only captured systems touched by the IP scanner. Agent-instrumented systems that hadn’t been scanned, for whatever reason, were left out.
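To make the gap concrete, here is a small Python model of the tag rule. It assumes, as the post’s fix implies, that an asset matches when at least one of the listed QIDs has been detected on it. The asset names and their detection sets are hypothetical, and the model does not talk to Qualys at all; it only illustrates why a 45038-only rule misses agent-only hosts.

```python
# Hypothetical model of a QID-list tag rule: an asset gets the tag if at least
# one listed QID was detected on it (assumed "any of" semantics).
# Asset names and detections below are made up for illustration.


def matches(detected_qids: set[int], tag_qids: set[int]) -> bool:
    """Return True if the asset shares at least one QID with the tag rule."""
    return not detected_qids.isdisjoint(tag_qids)


assets = {
    "scanned-server": {45038},            # seen only by the IP scanner
    "agent-laptop": {45531},              # agent only, never IP-scanned
    "scanned-and-agent": {45038, 45531},  # seen by both
}

first_attempt = {45038}
tagged = sorted(name for name, qids in assets.items() if matches(qids, first_attempt))
print(tagged)  # ['scanned-and-agent', 'scanned-server']
```

`agent-laptop` never picks up the tag, because nothing in its detections appears in the rule. Adding QID 45531 to the rule is what brings such hosts in.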

To address this, we added QID 45531 (Host Scan Time – CloudAgent), which has been broken out into its own QID to cover these assets. The updated query becomes:

```xml
<?xml version="1.0" encoding="UTF-8"?><TAG_CRITERIA><DETECTION><QID_LIST><QID>45531</QID><QID>45038</QID></QID_LIST></DETECTION></TAG_CRITERIA>
```

This now provides a single tag covering all scanned systems, for use in reports and API calls. Let me know in the comments if you know of a better way!
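Hand-editing these one-line criteria makes it easy to drop a character, such as the `>` on the closing tag. If you maintain the QID list in a script, a minimal sketch like the one below, using Python’s standard `xml.etree.ElementTree`, can generate the criteria and confirm they parse. The helper name `build_qid_criteria` is hypothetical, and the element names are copied from the query above. The sketch only produces the XML string; it does not create the tag or call any Qualys API.

```python
import xml.etree.ElementTree as ET


def build_qid_criteria(qids: list[int]) -> str:
    """Build TAG_CRITERIA XML listing the given QIDs under DETECTION/QID_LIST."""
    root = ET.Element("TAG_CRITERIA")
    qid_list = ET.SubElement(ET.SubElement(root, "DETECTION"), "QID_LIST")
    for qid in qids:
        ET.SubElement(qid_list, "QID").text = str(qid)
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>' + body


criteria = build_qid_criteria([45531, 45038])
print(criteria)

# Round-trip check: ET.fromstring raises ET.ParseError on malformed XML,
# e.g. a closing tag that is missing its ">".
parsed = ET.fromstring(criteria.encode("utf-8"))
print([q.text for q in parsed.iter("QID")])  # ['45531', '45038']
```

The printed criteria match the corrected query above character for character. Extending the tag later only requires adding a QID to the list.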

If you have any questions or feedback, you can reach me here or on Twitter @JaredGroves.